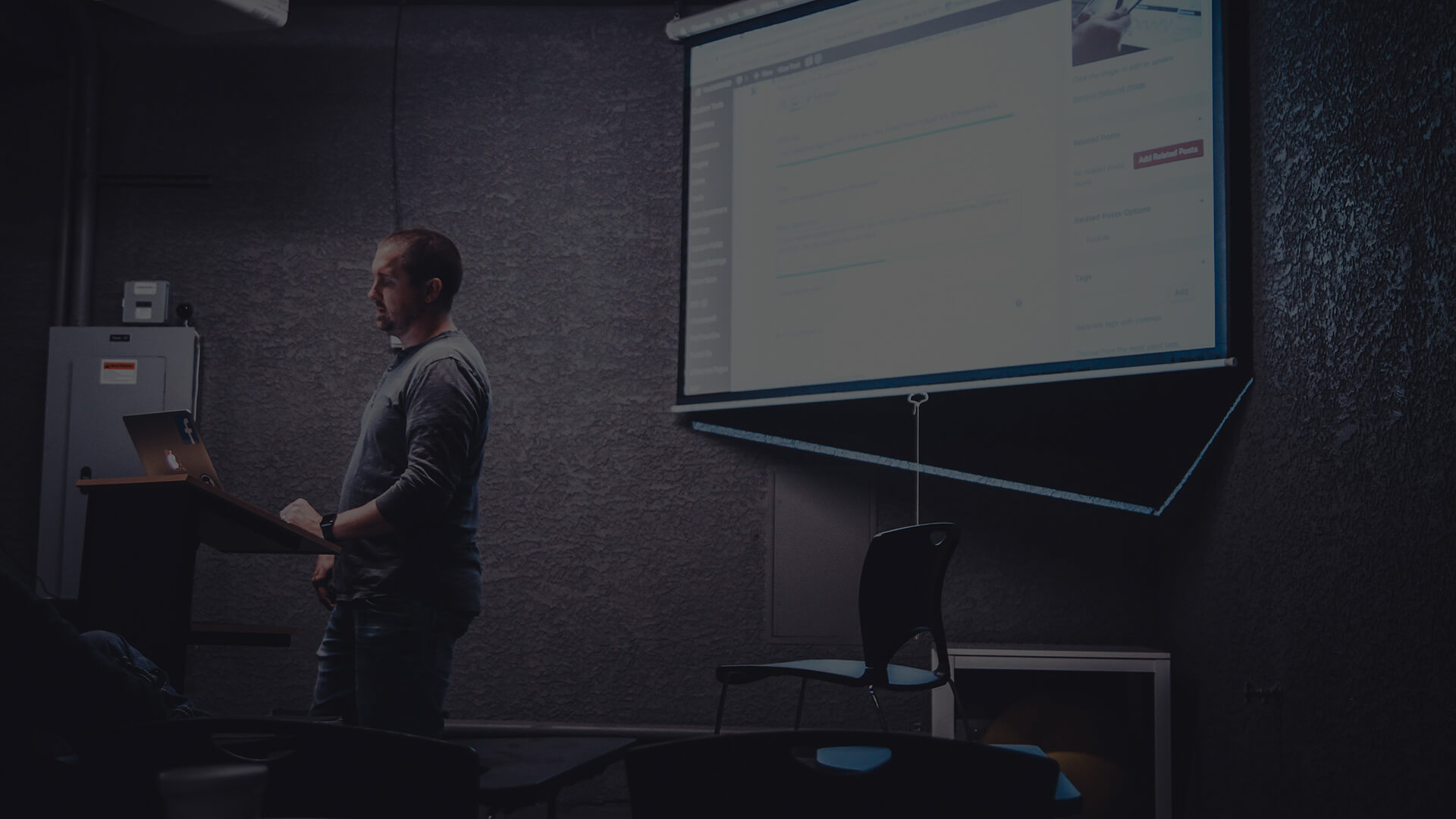

🎥 Recording

At Leboncoin, we have close to 200 Kafka topics produced by microservices covering all domains of the organisation (ad publication, ad validation, authentication, transactions, messaging...).

These topics are used by business intelligence, analytics, and machine learning teams, but first they need to be transferred to more "offline-friendly" storage that can be queried with a proper engine such as Spark or Athena.

Their schemas also tend to evolve quite rapidly, faster than the central data engineering team is able to cope with.

This talk will delve into the challenges we faced and the solutions we implemented, using Kafka Connect, to fully automate the process of discovering, normalizing, extracting, storing, and exposing those topics in our Hive metastore. The process runs without any human intervention, which enables greater agility for our downstream teams and makes the data engineering team less of a bottleneck when it comes to accessing data.
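
To make the "discovery" step more concrete, here is a minimal sketch of how sink connector definitions could be derived for topics that do not yet have one. It is not the implementation presented in the talk. It assumes an S3 sink using Confluent's S3 sink connector with Parquet output (the abstract only mentions Spark and Athena as query engines), and the function name, the `s3-sink-` naming convention, the bucket name, and the flush size are all hypothetical.

```python
from typing import Dict, Iterable, List, Set


def missing_connector_configs(
    topics: Iterable[str],
    existing_connectors: Set[str],
    bucket: str = "example-bucket",  # hypothetical bucket name
) -> List[Dict[str, object]]:
    """Build one sink connector definition per topic that has no connector yet.

    The returned dicts have the {"name": ..., "config": ...} shape that the
    Kafka Connect REST API accepts when creating a connector.
    """
    definitions = []
    for topic in sorted(topics):
        # Skip internal topics such as _schemas or __consumer_offsets.
        if topic.startswith("_"):
            continue
        name = f"s3-sink-{topic}"  # hypothetical naming convention
        if name in existing_connectors:
            continue
        definitions.append(
            {
                "name": name,
                "config": {
                    # Assumption: Confluent S3 sink connector writing Parquet.
                    "connector.class": "io.confluent.connect.s3.S3SinkConnector",
                    "storage.class": "io.confluent.connect.s3.storage.S3Storage",
                    "format.class": "io.confluent.connect.s3.format.parquet.ParquetFormat",
                    "topics": topic,
                    "s3.bucket.name": bucket,
                    "flush.size": "1000",  # illustrative value
                },
            }
        )
    return definitions


if __name__ == "__main__":
    discovered = ["ads.published", "_schemas", "auth.login"]
    already_deployed = {"s3-sink-auth.login"}
    for definition in missing_connector_configs(discovered, already_deployed):
        print(definition["name"])
```

In a real pipeline, the topic list would come from the Kafka cluster and the existing connector names from the Kafka Connect REST API. Keeping the function pure makes the diffing logic easy to test independently of both.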